List of participants of MSU Video Alignment and Retrieval Benchmark Suite

TMK

- Adapted the kernel descriptor framework of Bo (paper) to sequences of frames. Proposed a query expansion (QE) technique that automatically aligns the videos deemed relevant for the query.

- Added to the benchmark by MSU G&M Lab

VideoIndexer

- Detect scene changes and split the video into scenes. Align the scenes, then align the frames within the matched scenes.

- Added to the benchmark by MSU G&M Lab

- Links: paper

Time shift metric in VQMT tool

- Use PSNR to detect relevant frames.

- Added to the benchmark by MSU G&M Lab

- Links: project
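
The idea of using PSNR to find the matching frames can be sketched as a search over candidate shifts. This is a minimal illustration, not the VQMT implementation: the function names, the flat-list frame representation, the `max_shift` search window and the 100 dB cap are all assumptions made for the example.

```python
import math


def psnr(a, b, peak=255.0):
    """PSNR in dB between two equally sized frames given as flat pixel lists."""
    mse = sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    return math.inf if mse == 0 else 10.0 * math.log10(peak * peak / mse)


def estimate_time_shift(ref_frames, dist_frames, max_shift=5):
    """Return the constant shift s that maximizes the mean PSNR
    between ref_frames[i + s] and dist_frames[i] (hypothetical helper)."""
    best_shift, best_score = 0, -math.inf
    for s in range(-max_shift, max_shift + 1):
        pairs = [
            (ref_frames[i + s], dist_frames[i])
            for i in range(len(dist_frames))
            if 0 <= i + s < len(ref_frames)
        ]
        if not pairs:
            continue
        # Cap PSNR at 100 dB so identical frames (infinite PSNR) can be averaged.
        score = sum(min(psnr(r, d), 100.0) for r, d in pairs) / len(pairs)
        if score > best_score:
            best_shift, best_score = s, score
    return best_shift


# Toy data: eight 4-pixel frames; the second video starts two frames later.
ref = [[10 * i] * 4 for i in range(8)]
dist = ref[2:]
shift = estimate_time_shift(ref, dist)
```

In this toy case `dist[i]` equals `ref[i + 2]`, so the search settles on a shift of 2. A real tool would also have to handle dropped or duplicated frames, which a single constant shift cannot describe.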

Time shift metric in VQMT3D tool

- Use motion vectors and RANSAC to measure time shift between frames.

- Added to the benchmark by MSU G&M Lab

- Links: project

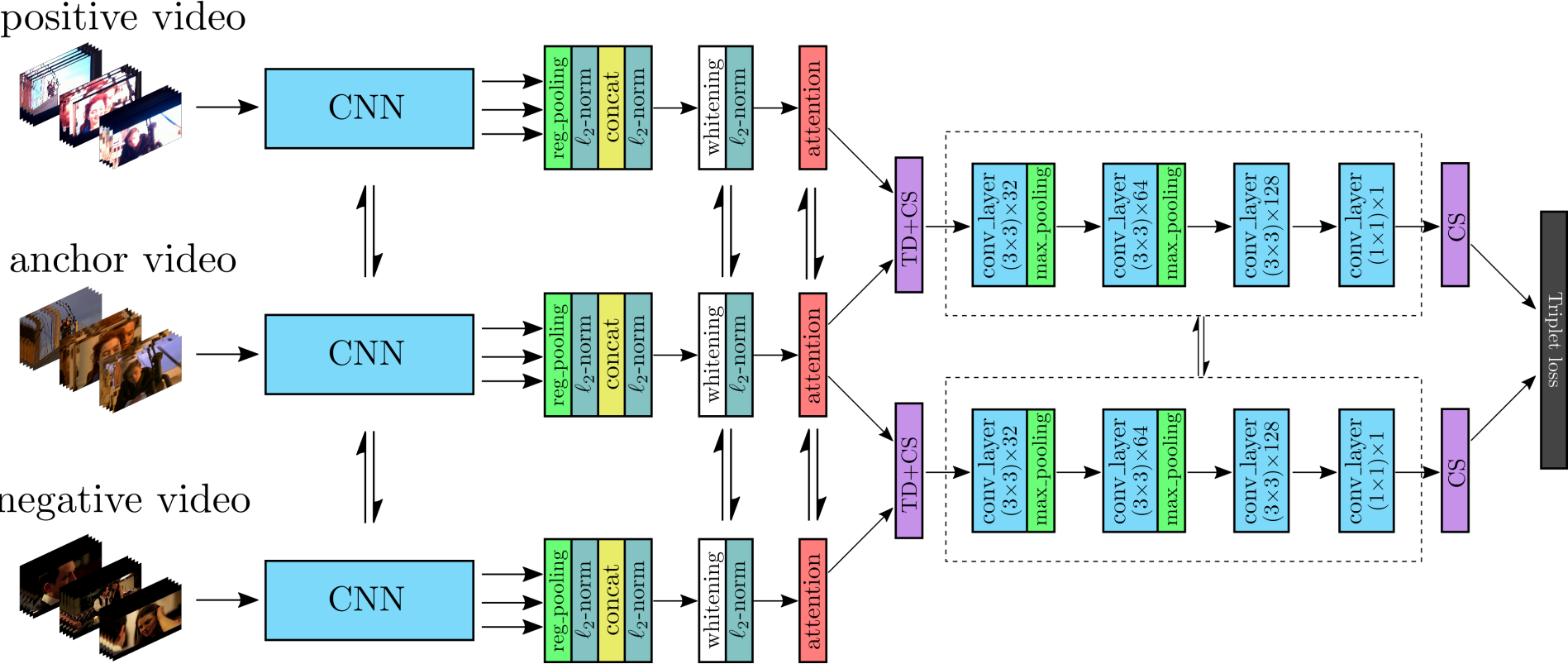

ViSiL

- Use RMAC descriptors to estimate frame-to-frame and video-to-video similarity.

- Added to the benchmark by MSU G&M Lab

- MSU modified this method to suit the benchmark suite tasks: we measure only frame-to-frame similarity, then build the synchronization map by taking the maximum values in the resulting frame-to-frame similarity matrix.
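
The "take the maximum" step can be sketched as a row-wise argmax over a frame-to-frame similarity matrix. This is only an illustration under stated assumptions: cosine similarity on small hand-written vectors stands in for the learned ViSiL frame similarity, and the names `cosine`, `similarity_matrix` and `synchronization_map` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def similarity_matrix(query_feats, ref_feats):
    """sim[i][j] is the similarity of query frame i to reference frame j."""
    return [[cosine(q, r) for r in ref_feats] for q in query_feats]


def synchronization_map(sim):
    """For each query frame, pick the reference frame with maximum similarity."""
    return [max(range(len(row)), key=row.__getitem__) for row in sim]


# Toy descriptors standing in for per-frame features.
ref_feats = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0]]
query_feats = [[0, 0.9, 0.1], [0.1, 0, 2.0]]
sync = synchronization_map(similarity_matrix(query_feats, ref_feats))
```

Here query frame 0 maps to reference frame 1 and query frame 1 maps to reference frame 2. A per-row argmax does not enforce temporal monotonicity, so neighbouring query frames may map to distant reference frames.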

ViSiL_SCD

- Use the ViSiL architecture to compute frame features. Detect scene changes from the features and split the videos into scenes. Match the scenes by video-to-video similarity, then build the synchronization map by taking the maximum values in the frame-to-frame similarity matrix.

- Added to the benchmark by MSU G&M Lab
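
The scene-level part of this pipeline can be sketched as follows. The sketch rests on assumptions that the description above does not confirm: a scene cut is declared when the cosine similarity of consecutive frame features drops below a hypothetical `threshold`, and the similarity of mean scene features is used as a simple stand-in for ViSiL's video-to-video similarity.

```python
import math


def cosine(u, v):
    """Cosine similarity of two feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def split_into_scenes(feats, threshold=0.5):
    """Return (start, end) frame ranges; cut where consecutive similarity < threshold."""
    if not feats:
        return []
    scenes, start = [], 0
    for i in range(1, len(feats)):
        if cosine(feats[i - 1], feats[i]) < threshold:
            scenes.append((start, i))
            start = i
    scenes.append((start, len(feats)))
    return scenes


def mean_feature(feats, scene):
    """Average the frame features inside one scene."""
    start, end = scene
    dim = len(feats[0])
    return [sum(feats[i][d] for i in range(start, end)) / (end - start) for d in range(dim)]


def match_scenes(q_feats, q_scenes, r_feats, r_scenes):
    """For each query scene, return the index of the most similar reference scene."""
    r_means = [mean_feature(r_feats, s) for s in r_scenes]
    matches = []
    for scene in q_scenes:
        q_mean = mean_feature(q_feats, scene)
        sims = [cosine(q_mean, r_mean) for r_mean in r_means]
        matches.append(max(range(len(sims)), key=sims.__getitem__))
    return matches


# Toy features: the reference has two scenes; the query shows them in reverse order.
r_feats = [[1, 0], [1, 0.1], [0, 1], [0.1, 1]]
q_feats = [[0, 1], [0, 0.9], [1, 0]]
r_scenes = split_into_scenes(r_feats)
q_scenes = split_into_scenes(q_feats)
matches = match_scenes(q_feats, q_scenes, r_feats, r_scenes)
```

With these toy features the reference splits into frames 0-1 and 2-3, the query into frames 0-1 and 2, and the query scenes match reference scenes 1 and 0 respectively. Frame-level synchronization would then be computed only inside each matched pair of scenes.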

