MSU Video Alignment and Retrieval Benchmark Suite

Explore the best algorithms in different video alignment tasks

Konstantin Kozhemyakov

What’s new

- 22.10.2021 Public beta-version Release

- 06.10.2021 Alpha-version Release

Key features of the Benchmark Suite

- The most diverse dataset for alignment of near-duplicate videos:
  - 560 test pairs in each Benchmark with a total duration of ~2 million frames
  - Combinations of 13 frequent distortions obtained due to human/machine video editing and processing

- Test your method on our Benchmarks:
  - Local time distortions: occur due to video processing (transmitting, compressing with low bitrate etc.)
  - Global time distortions: occur due to video editing
  - Mixed version for versatility testing on both distortion types

- Find the best method for your near-duplicate video alignment requirements:
  - Each Benchmark contains three distortion presets representing use cases with different complexity

We appreciate new ideas. Please write to us at aligners-benchmark@videoprocessing.ai

Evaluation

You can choose the preset on which the algorithms were tested and sort charts by the algorithm.

NOTE: The same test pair number on different charts does not necessarily refer to the same test pair, because the results are sorted separately for each algorithm.

Local Time Distortions

Leaderboard

| Name | Accuracy 0 frames error | Accuracy 3 frames error | Accuracy 10 frames error |
|---|---|---|---|

Charts


Global Time Distortions

Leaderboard

| Name | F1-score | Precision | Recall |
|---|---|---|---|

Charts


Mixed Time Distortions

Leaderboard

| Name | F1-score | Precision | Recall |
|---|---|---|---|

Charts


Methodology

Video sequences selection

Content diversity in test sequences is necessary for running algorithms in near-realistic conditions. To provide this diversity, we took the following steps.

We identified two families of cases: processes during which temporal distortions can occur (e.g. video editing, streaming, capturing from different rigidly connected cameras) and content that is difficult to align (e.g. talking heads, recurring events such as football matches or Formula 1 racing, and footage from a camera on a tripod with a small amount of movement). Then, we collected ~2000 videos from Vimeo from each taxonomic category to ensure unbiased video selection and sufficient coverage in terms of the semantics and context presented in the video.

Finally, we calculated SI/TI features for each video and, for each class, mapped the videos to the use cases mentioned above.

We chose videos that sufficiently cover the SI/TI space and all the identified use cases, which gave us 56 source videos.
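The SI/TI step can be illustrated with a short sketch. It assumes the usual ITU-T P.910 definitions (SI is the maximum over time of the spatial standard deviation of the Sobel-filtered frame, TI is the maximum over time of the standard deviation of consecutive frame differences) and that frames are already available as a luma array of shape `(T, H, W)`. The function names are hypothetical and this is not the benchmark's own code.

```python
import numpy as np


def sobel_magnitude(frame: np.ndarray) -> np.ndarray:
    """Gradient magnitude of a 2-D luma frame with 3x3 Sobel kernels (valid region only)."""
    f = frame.astype(np.float64)
    # Horizontal gradient: right column minus left column, weighted 1-2-1 vertically.
    gx = (f[:-2, 2:] + 2 * f[1:-1, 2:] + f[2:, 2:]) - (
        f[:-2, :-2] + 2 * f[1:-1, :-2] + f[2:, :-2]
    )
    # Vertical gradient: bottom row minus top row, weighted 1-2-1 horizontally.
    gy = (f[2:, :-2] + 2 * f[2:, 1:-1] + f[2:, 2:]) - (
        f[:-2, :-2] + 2 * f[:-2, 1:-1] + f[:-2, 2:]
    )
    return np.hypot(gx, gy)


def si_ti(frames: np.ndarray) -> tuple[float, float]:
    """Return (SI, TI) for a luma video of shape (T, H, W), following P.910."""
    if frames.ndim != 3 or frames.shape[0] == 0:
        raise ValueError("expected a non-empty array of shape (T, H, W)")
    # SI: maximum over time of the spatial std of the Sobel-filtered frame.
    si = max(float(sobel_magnitude(f).std()) for f in frames)
    # TI: maximum over time of the spatial std of the frame difference.
    diffs = np.diff(frames.astype(np.float64), axis=0)
    ti = max((float(d.std()) for d in diffs), default=0.0)
    return si, ti
```

Plotting each candidate video as a point in the (SI, TI) plane is one way to check visually whether a selection covers the space.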

Dataset

Our dataset is constantly updated. It currently contains 56 source video sequences with a total duration of 195,411 frames. All video sequences have a resolution of 1920×1080, and their frame rates range from 16 to 60 FPS.

Benchmarks and presets

We divide the problem of video alignment into three logical parts: local time distortions (which occur due to video processing), global time distortions (which occur due to video editing), and mixed time distortions (for versatility testing on both distortion types). Hence, our benchmark suite contains three benchmarks.

| Benchmark | Time distortions | Metric |

|---|---|---|

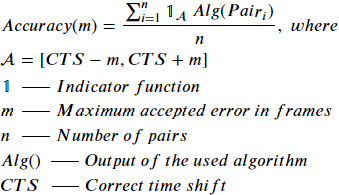

| Local time distortions | Drop frames | Accuracy(%) of correctly localized time-shifts of initial frames depending on maximum accepted error in frames |

| Duplicate frames | ||

| Freeze frames | ||

| Time shift | ||

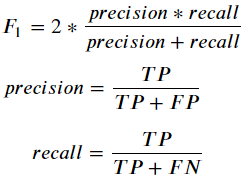

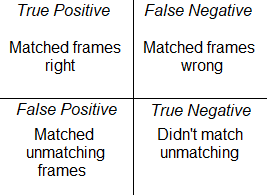

| Global time distortions | Fragment insertion | F1-score |

| Drop fragment | ||

| Cut the video | ||

| Mixed time distortions | All time distortions |

Metrics are calculated as follows:
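As a rough illustration only, and not the benchmark's reference implementation, the two metric families could be computed as in the sketch below. It makes two assumptions: for local distortions, each test item has one predicted and one ground-truth time shift in frames; for global and mixed distortions, each frame of the distorted video is mapped to a source frame index, or to `None` when it has no counterpart (for example, an inserted fragment). The function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def accuracy_within(pred_shifts: Sequence[int],
                    true_shifts: Sequence[int],
                    max_error: int) -> float:
    """Percentage of items whose predicted shift is within max_error frames of the truth."""
    if len(pred_shifts) != len(true_shifts) or not true_shifts:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(abs(p - t) <= max_error for p, t in zip(pred_shifts, true_shifts))
    return 100.0 * hits / len(true_shifts)


def precision_recall_f1(pred: Sequence[Optional[int]],
                        truth: Sequence[Optional[int]]) -> Tuple[float, float, float]:
    """Frame-level precision, recall and F1 for a predicted frame mapping.

    A prediction counts as a true positive only when it names a source frame
    and that frame equals the ground truth.
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have equal length")
    tp = sum(p is not None and p == t for p, t in zip(pred, truth))
    predicted = sum(p is not None for p in pred)   # frames the method matched
    relevant = sum(t is not None for t in truth)   # frames that have a match
    precision = tp / predicted if predicted else 0.0
    recall = tp / relevant if relevant else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    # The leaderboard thresholds are 0, 3 and 10 frames of accepted error.
    for tol in (0, 3, 10):
        print(tol, accuracy_within([0, 2, 9, 20], [0, 0, 0, 0], tol))
```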

Each benchmark consists of four presets for a more precise understanding of the pros and cons of algorithms.

| Preset | Used distortions |
|---|---|
| Light | Time distortions |
| Medium geometric | Time distortions, Geometric distortions |
| Medium color | Time distortions, Color distortions |
| Hard | All distortions |
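For readers who want to reproduce a similar setup, the preset table can be written down as a small configuration. This is a sketch: the dictionary layout, the group names `"time"`, `"geometric"` and `"color"`, and the helper function are hypothetical, and "All distortions" is read as the union of the three groups.

```python
# Preset name -> distortion groups applied, transcribed from the table above.
PRESETS = {
    "Light": frozenset({"time"}),
    "Medium geometric": frozenset({"time", "geometric"}),
    "Medium color": frozenset({"time", "color"}),
    "Hard": frozenset({"time", "geometric", "color"}),
}


def presets_using(group: str) -> list[str]:
    """Return the names of all presets that apply the given distortion group."""
    return [name for name, groups in PRESETS.items() if group in groups]
```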

Distortions and distortion distribution

| Distortion type | Distortion | Description |
|---|---|---|
| Time distortions | Drop frames | First, last or random frame number < fps; drop/duplicate 1, 2 or random up to 10 frames |
| Time distortions | Duplicate/Freeze frames | First, last or random frame number < fps; drop/duplicate 1, 2 or random up to 10 frames |
| Time distortions | Time shift | Crop up to 11 frames from the start |
| Time distortions | Fragment insertion | Insert fragment from another video |
| Time distortions | Drop fragment | Drop random fragment |
| Time distortions | Cut the video | Cut up to 3/4 of the entire video |
| Color distortions | Add noise | Add Gaussian noise, sigma 3 |
| Color distortions | Add blur | Add Gaussian blur, sigma 1.5 |
| Color distortions | Random brightness & contrast | Random brightness decrease from -10% to -25%, contrast increase from 5% to 30% |
| Geometric distortions | Add logo | Logo or news title. Covers max 300 px from bottom/top/left/right |
| Geometric distortions | Add subtitles | English language. Covers max 250 px from bottom or top |
| Geometric distortions | Crop | Crop 2/4/8 px from right and bottom |
| Geometric distortions | Scale | Scale up to 110% |
| Geometric distortions | Add black bars | Crop to a ratio of 4:3 or 21:9, then return to 16:9 by adding black bars |
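To make the local time distortions concrete, the sketch below applies them to a list of source frame indices rather than to pixels, so the result doubles as a ground-truth mapping from distorted frames back to source frames. The function names and the choice to pass positions and counts explicitly are assumptions made for illustration; how the benchmark samples them is described only in the table above.

```python
from typing import List

MAX_SHIFT = 11  # "Crop up to 11 frames from the start"
MAX_COUNT = 10  # "drop/duplicate ... up to 10 frames"


def time_shift(indices: List[int], crop: int) -> List[int]:
    """Remove the first `crop` frames."""
    if not 0 <= crop <= MAX_SHIFT:
        raise ValueError(f"crop must be between 0 and {MAX_SHIFT}")
    return indices[crop:]


def drop_frames(indices: List[int], start: int, count: int) -> List[int]:
    """Remove `count` consecutive frames beginning at position `start`."""
    if not 1 <= count <= MAX_COUNT:
        raise ValueError(f"count must be between 1 and {MAX_COUNT}")
    return indices[:start] + indices[start + count:]


def duplicate_frames(indices: List[int], start: int, count: int) -> List[int]:
    """Repeat the frame at position `start` `count` extra times (a freeze)."""
    if not 1 <= count <= MAX_COUNT:
        raise ValueError(f"count must be between 1 and {MAX_COUNT}")
    return indices[:start + 1] + [indices[start]] * count + indices[start + 1:]


if __name__ == "__main__":
    source = list(range(12))                # frame indices of a 12-frame clip
    distorted = time_shift(source, 2)       # frames 0 and 1 are cropped
    distorted = drop_frames(distorted, 3, 2)
    distorted = duplicate_frames(distorted, 0, 1)
    # Each entry is the source frame shown at that position in the distorted clip.
    print(distorted)
```

Keeping the index list alongside the distorted video makes it straightforward to score a predicted alignment frame by frame.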

Contacts

For questions and suggestions, please contact us at aligners-benchmark@videoprocessing.ai
