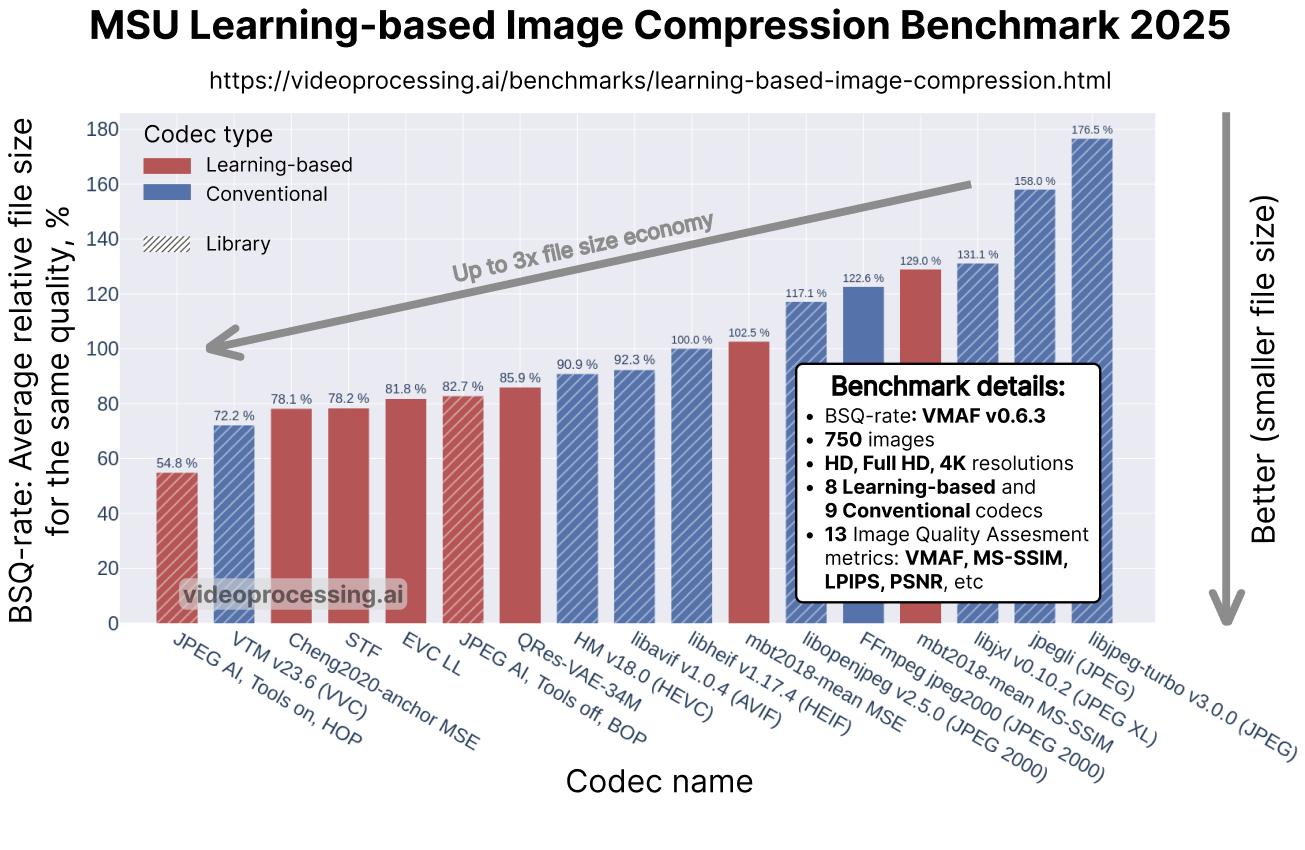

MSU Learning-based Image Compression Benchmark 2024

Explore the Best Learned Image Compression Methods

G&M Lab head: Dr. Dmitriy Vatolin

Project adviser: Dr. Dmitriy Kulikov

Measurements, analysis:

Vitaly Rylov

Roman Kazantsev

Diverse dataset

- Over 750 test images

- HD, Full HD and 4K resolutions

- Various content types

- Processed over 1M images to create the dataset

Large comparison

- 20 codecs tested

- 13 IQA metrics

- Subjective comparison (soon)

- JPEG AI reference codec results

Large leaderboard

- BSQ-rate for codec ranking

- Rate-Distortion curves
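As an illustration of how a rate-distortion curve can be reduced to a single ranking number, here is a minimal sketch of a "bitrate for the same quality" ratio. It is an assumption, not the benchmark's actual BSQ-rate implementation: the function names, the piecewise-linear interpolation, and the sample curves are all hypothetical. Each curve is a list of `(bitrate, quality)` points, and the score is the ratio of the areas under the bitrate-versus-quality curves over the quality range both codecs share.

```python
def interp(x, xs, ys):
    """Piecewise-linear interpolation of y at x; xs must be strictly increasing."""
    for i in range(len(xs) - 1):
        x0, x1 = xs[i], xs[i + 1]
        if x0 <= x <= x1:
            t = (x - x0) / (x1 - x0)
            return ys[i] + t * (ys[i + 1] - ys[i])
    raise ValueError("x lies outside the range covered by the curve")


def area_bitrate_over_quality(curve, q_lo, q_hi, steps=1000):
    """Trapezoidal integral of bitrate as a function of quality on [q_lo, q_hi]."""
    points = sorted(curve, key=lambda p: p[1])  # sort by quality
    qs = [q for _, q in points]
    rs = [r for r, _ in points]
    h = (q_hi - q_lo) / steps
    total = 0.0
    prev = interp(q_lo, qs, rs)
    for i in range(1, steps + 1):
        q = min(q_lo + i * h, q_hi)  # clamp to guard against float drift
        cur = interp(q, qs, rs)
        total += 0.5 * (prev + cur) * h
        prev = cur
    return total


def bitrate_ratio(test_curve, ref_curve):
    """Area ratio (test / reference) over the shared quality interval.

    Values below 1 mean the test codec needs less bitrate than the
    reference codec to reach the same quality.
    """
    q_lo = max(min(q for _, q in test_curve), min(q for _, q in ref_curve))
    q_hi = min(max(q for _, q in test_curve), max(q for _, q in ref_curve))
    if q_lo >= q_hi:
        raise ValueError("the curves share no quality interval")
    test_area = area_bitrate_over_quality(test_curve, q_lo, q_hi)
    ref_area = area_bitrate_over_quality(ref_curve, q_lo, q_hi)
    return test_area / ref_area


if __name__ == "__main__":
    # Hypothetical (bitrate in bpp, quality score) points, not benchmark data.
    reference = [(1.0, 30.0), (2.0, 35.0), (4.0, 40.0)]
    candidate = [(0.5, 30.0), (1.0, 35.0), (2.0, 40.0)]
    print(bitrate_ratio(candidate, reference))
```

In this made-up example the candidate codec reaches every quality level at half the reference bitrate, so the ratio comes out at about 0.5. Restricting the integral to the shared quality interval keeps the comparison from extrapolating either curve.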

What’s new

- 14.04.2024 Benchmark Release!

- 14.02.2025 Added JPEG AI.

Leaderboard

RD-curve examples

Cite us

@misc{rylov2024learning,
  title={Learning-based Image Compression Benchmark},
  author={Rylov, Vitaly and Kazantsev, Roman and Vatolin, Dmitriy},
  year={2024},
  url={https://videoprocessing.ai/benchmarks/learning-based-image-compression.html}
}

Contacts

We would highly appreciate any suggestions and ideas on how to improve our benchmark.

Please contact us via e-mail: image-compression-benchmark@videoprocessing.ai.
