MSU Super-Resolution for Video Compression Benchmark

Discover SR methods for compressed videos and choose the best model to use with your codec

Ivan Molodetskikh, Egor Sklyarov

Diverse dataset

- H.264, H.265, H.266, AV1, AVS3 codec standards

- More than 260 test videos

- 6 different bitrates

Various charts

- Visual comparison for more than 80 SR+codec pairs

- RD curves and bar charts for 5 objective metrics

- SR+codec pairs ranked by BSQ-rate

Extensive report

- 80+ pages with different plots

- 15 SOTA SR methods and 6 objective metrics

- Extensive subjective comparison with 5300+ valid participants

- Powered by Subjectify.us

Introduction

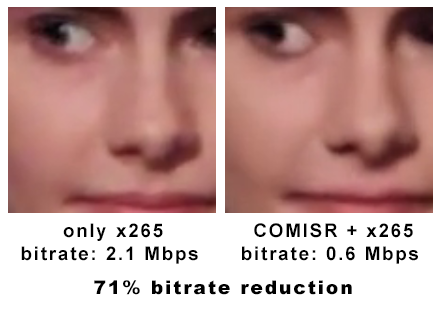

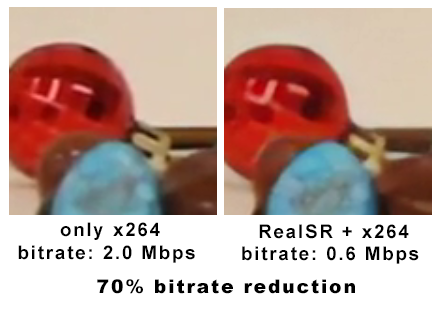

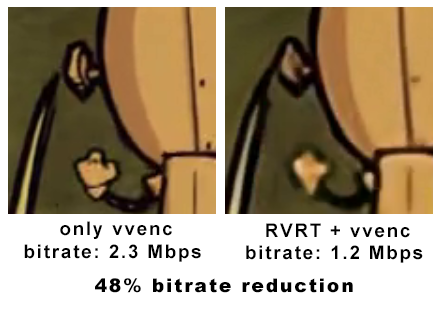

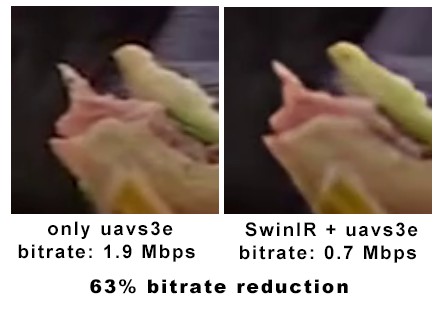

We present a comprehensive SR benchmark to test the ability of SR models to upscale and restore videos compressed by video codecs of different standards. We evaluate the perceptual quality of the restored videos by conducting a crowd-sourced subjective comparison. For every tested codec, we show which SR methods provide the most video bitrate reduction with the least quality loss.

Everyone is welcome to participate! Run your favorite super-resolution method on our compact test video and send us the result to see how well it performs. Check the “Submit your method” section to learn the details.

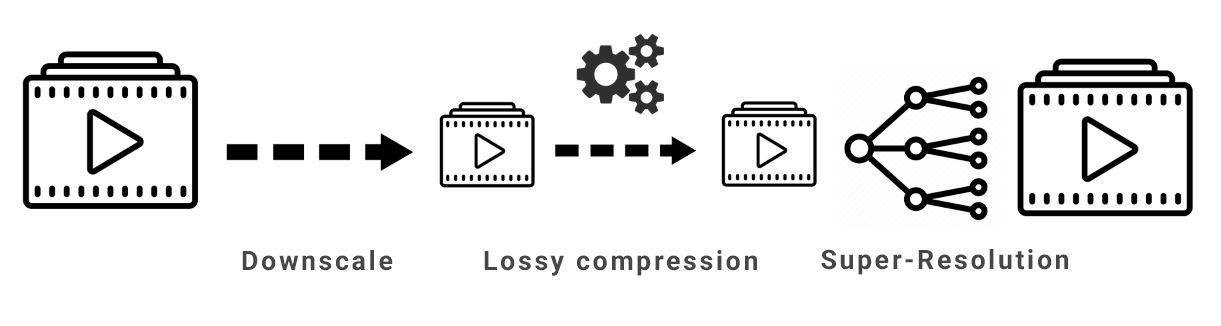

The pipeline of our benchmark

What’s new

- 26.10.2025 Added BNE-eXSR-v1.

- 20.08.2024 Our paper “SR+Codec: a Benchmark of Super-Resolution for Video Compression Bitrate Reduction” was accepted to BMVC 2024.

- 21.07.2023 Added E-MoEVRT.

- 12.06.2023 Added RKPQ-4xSR.

- 21.07.2022 Added MDTVSFA correlation to the correlation chart.

- 12.04.2022 Uploaded the results of an extensive subjective comparison. See “Subjective score” in the Charts section.

- 25.03.2022 Added VRT, BasicVSR++, RBPN, and COMISR. Updated the Leaderboards and Visualizations sections.

- 14.03.2022 Uploaded a new dataset. Updated the Methodology.

- 26.10.2021 Updated the Methodology.

- 12.10.2021 Published the October Report. Added 2 new videos to the dataset. Updated the Charts section and Visualizations.

- 28.09.2021 Improved the Leaderboards section to make it more user-friendly, updated the Methodology and added ERQAv1.1 metric.

- 21.09.2021 Added 2 new videos to the dataset, new plots to the Charts section, and new Visualizations.

- 14.09.2021 Public beta-version Release.

- 31.08.2021 Alpha-version Release.

Charts

In this section, you can see RD curves, which show the bitrate/quality trade-off of each SR+codec pair, and bar charts, which show the BSQ-rate calculated for objective metrics and subjective scores.

Read about the participants here.

You can see the information about codecs in the methodology.

More details about metrics here.

Charts with metrics

You can choose the test sequence, the codec that was used to compress it, and the metric.

If the BSQ-rate of a method equals 0, the method should be considered much better than the reference codec (the codec without SR).


Correlation of metrics with subjective assessment

We computed objective metrics on the crops used in the subjective comparison and calculated the correlation between the subjective and objective results. Below you can see the correlation of each metric averaged over all test cases.

* ERQA-MDTVSFA is calculated by multiplying MDTVSFA and ERQA values over the video.
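The correlation procedure above can be sketched as follows. This is a minimal illustration, not the benchmark's code: the page does not say which correlation coefficient is used, so the sketch assumes Pearson's linear correlation, and it assumes that ERQA-MDTVSFA multiplies one video-level ERQA value by one video-level MDTVSFA value. The helper names (`pearson`, `erqa_mdtvsfa`, `average_correlation`) are hypothetical.

```python
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Pearson linear correlation between two equal-length score lists.

    Assumption: the benchmark's coefficient is not specified; Pearson is used here.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


def erqa_mdtvsfa(erqa, mdtvsfa):
    """Combined score: product of video-level ERQA and MDTVSFA values (assumed interpretation)."""
    return erqa * mdtvsfa


def average_correlation(test_cases):
    """Mean metric-vs-subjective correlation over all test cases.

    Each test case is a pair (metric_scores, subjective_scores) with one entry
    per SR+codec result evaluated on that test case's crops.
    """
    return mean(pearson(metric, subjective) for metric, subjective in test_cases)
```

For a rank-based variant such as Spearman's coefficient, replace each score list with its ranks before calling `pearson`.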

Visualization

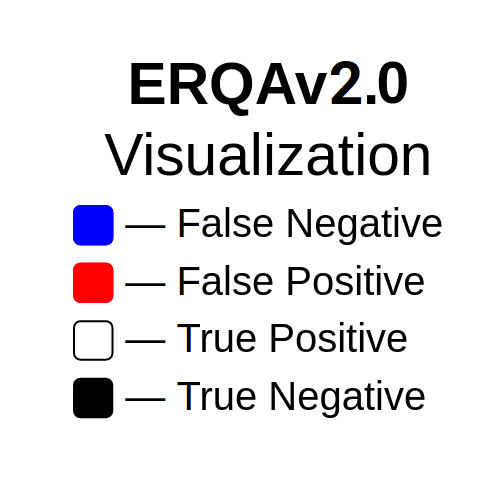

In this section, you can choose a sequence and view a cropped part of one of its frames, along with the shifted Y-PSNR and ERQAv2.0 visualizations for this crop. For shifted Y-PSNR, we find the shift that maximizes Y-PSNR and apply MSU VQMT PSNR to the frames aligned with this shift. See the Methodology for more information.
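The shift search behind shifted Y-PSNR can be illustrated with a small pure-Python sketch. It assumes 8-bit luma planes given as lists of rows, integer-pixel shifts within a hypothetical `max_shift` radius, and a fixed inner window so that every candidate shift is scored on the same reference pixels. The benchmark itself uses MSU VQMT PSNR on the aligned frames, and its exact shift range and border handling may differ.

```python
import math


def psnr_for_shift(ref, dist, dy, dx, border, peak=255.0):
    """Y-PSNR between ref and dist shifted by (dy, dx) on a fixed inner window.

    The window skips `border` pixels on each side of the reference, so every
    shift with |dy|, |dx| <= border compares the same reference pixels.
    """
    h, w = len(ref), len(ref[0])
    se, n = 0.0, 0
    for y in range(border, h - border):
        for x in range(border, w - border):
            d = ref[y][x] - dist[y + dy][x + dx]
            se += d * d
            n += 1
    mse = se / n
    return math.inf if mse == 0 else 10.0 * math.log10(peak * peak / mse)


def shifted_y_psnr(ref, dist, max_shift=2):
    """Return (best_psnr, (dy, dx)) over integer shifts in [-max_shift, max_shift].

    `max_shift` is a hypothetical search radius chosen for illustration.
    """
    if len(ref) != len(dist) or len(ref[0]) != len(dist[0]):
        raise ValueError("frames must have the same size")
    if 2 * max_shift >= min(len(ref), len(ref[0])):
        raise ValueError("frame too small for this max_shift")
    best = (-math.inf, (0, 0))
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            p = psnr_for_shift(ref, dist, dy, dx, border=max_shift)
            if p > best[0]:
                best = (p, (dy, dx))
    return best
```

Cropping a fixed border keeps the scores comparable; otherwise each shift would be scored on a different set of pixels. A production version would use vectorized arrays instead of nested loops.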


Leaderboards

The table below lists, for each codec, all SR methods that took part in the subjective comparison, as well as the “only codec” method, which applies the video codec to the source video without downscaling or super-resolution.

You can choose the video and the codec that was used to compress it.

All sequences are compressed at an approximate bitrate of 2 Mbps.

You can sort the table by any column.

| SR | Subjective | ERQA | LPIPS | Y-PSNR | MDTVSFA | Y-VMAF | Y-MS-SSIM |
|---|---|---|---|---|---|---|---|

Submit your method

Verify your method’s ability to restore compressed videos and compare it with other algorithms.

You can go to the page with information about other participants.

1. Download input data

Download the low-resolution input videos as sequences of frames in PNG format. There are 2 available options:

2. Apply your algorithm

Apply your super-resolution algorithm to upscale the frames to 1920×1080 resolution. Alternatively, you can send us the code of your method or an executable file with instructions on how to run it, and we will run it ourselves.
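Before sending results, it can help to confirm that every upscaled frame really is 1920×1080. The sketch below reads the width and height from each PNG's IHDR header using only the standard library, without decoding pixels. The folder layout and the function names (`png_size`, `check_frames`) are hypothetical; the actual submission requirements are the ones described in this section.

```python
import struct
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
TARGET_SIZE = (1920, 1080)  # (width, height) required for the upscaled frames


def png_size(path):
    """Read (width, height) from a PNG's IHDR chunk without decoding pixels."""
    with open(path, "rb") as f:
        header = f.read(24)  # 8-byte signature + 4-byte length + "IHDR" + width + height
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} is not a valid PNG file")
    return struct.unpack(">II", header[16:24])  # big-endian unsigned 32-bit ints


def check_frames(folder, expected=TARGET_SIZE):
    """Return (filename, size) for every PNG frame in `folder` whose size differs from `expected`.

    Hypothetical helper: assumes all output frames sit directly in one folder as *.png files.
    """
    frames = sorted(Path(folder).glob("*.png"))
    if not frames:
        raise FileNotFoundError(f"no PNG frames found in {folder}")
    bad = []
    for frame in frames:
        size = png_size(frame)
        if size != expected:
            bad.append((frame.name, size))
    return bad
```

For example, `check_frames("output/")` (a hypothetical output folder) returns an empty list when all frames match the target size.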

3. Send us the result

Send an email to sr-codecs-benchmark@videoprocessing.ai with the following information:

to the cloud drive)

You can verify the results of current participants or estimate the performance of your method on public samples of our dataset. Just send an email to sr-codecs-benchmark@videoprocessing.ai with a request to share them with you.

Our policy:

- We won't publish the results of your method without your permission.

- We share only public samples of our dataset, as the full dataset is private.

Download the Report

Videos used in the report:

- The Alberta Retired Teachers’ Association by SAVIAN

- First Day by Outside Adventure Media

Acknowledgements:

- Subjective comparison was supported by Toloka Research Grants Program.

Cite Us

To refer to our benchmark in your work, cite our paper:

@inproceedings{Bogatyrev_2024_BMVC,
  author={Evgeney Bogatyrev and Ivan Molodetskikh and Dmitriy S. Vatolin},
  booktitle={35th British Machine Vision Conference 2024, {BMVC} 2024, Glasgow, UK, November 25-28, 2024},
  publisher={BMVA},
  title={SR+Codec: a Benchmark of Super-Resolution for Video Compression Bitrate Reduction},
  year={2024},
  url={https://bmva-archive.org.uk/bmvc/2024/papers/Paper_959/paper.pdf},
}

Contact Us

For questions and suggestions, please contact us: sr-codecs-benchmark@videoprocessing.ai

You can subscribe to updates on our benchmark:

MSU Video Quality Measurement Tool

Widest Range of Metrics & Formats

- Modern and classical metrics: SSIM, MS-SSIM, PSNR, VMAF, and 10+ more

- No-reference analysis and video characteristics: blurring, blocking, noise, scene change detection, NIQE, and more

Fastest Video Quality Measurement

- GPU support: up to 11.7x faster calculation of metrics with GPU

- Real-time measurement

- Unlimited file size

Main MSU VQMT page on compression.ru

Crowd-sourced subjective quality evaluation platform

- Conduct comparisons of video codecs and/or encoding parameters

What is it?

Subjectify.us is a web platform for conducting fast crowd-sourced subjective comparisons.

The service is designed for the comparison of images, video, and sound processing methods.

Main features

- Pairwise comparison

- Detailed report

- Providing all of the raw data

- Filtering out answers from cheating respondents

Subjectify.us


MSU Benchmark Collection

- Super-Resolution Quality Metrics Benchmark


- Video Colorization Benchmark

- Video Saliency Prediction Benchmark

- LEHA-CVQAD Video Quality Metrics Benchmark

- Learning-Based Image Compression Benchmark

- Super-Resolution for Video Compression Benchmark

- Defenses for Image Quality Metrics Benchmark

- Deinterlacer Benchmark

- Metrics Robustness Benchmark

- Video Upscalers Benchmark

- Video Deblurring Benchmark

- Video Frame Interpolation Benchmark

- HDR Video Reconstruction Benchmark

- No-Reference Video Quality Metrics Benchmark

- Full-Reference Video Quality Metrics Benchmark

- Video Alignment and Retrieval Benchmark

- Mobile Video Codecs Benchmark

- Video Super-Resolution Benchmark

- Shot Boundary Detection Benchmark

- The VideoMatting Project

- Video Completion

- Codecs Comparisons & Optimization

- VQMT

- MSU Datasets Collection

- Metrics Research

- Video Quality Measurement Tool 3D

- Video Filters

- Other Projects