MSU Shot Boundary Detection Benchmark — selecting the best plugin

Comprehensive analysis of shot boundary detection methods

Anastasia Antsiferova

This benchmark is designed to evaluate different algorithms for the Shot Boundary Detection task. It also lets you test your own algorithm for detecting cut, dissolve, wipe and fade scene transitions.

What’s new

- 02.09.2021 Added PyScene-v2 and GStreamer

- 11.09.2021 Added NITS-CV-Lab

- 05.05.2021 main Release

- 16.12.2020 beta-version Release

Key features of the Benchmark

- Extensive and diverse datasets for shot-boundary detection testing:

- 31 videos from 2 datasets (RAI and MSU CC) plus 19 manually annotated open-source videos

- 1287 minutes with 10883 shot transitions

- Cut, dissolve and fade transitions, complex scenes with high spatial and temporal complexity

- Clear, easy-to-interpret visualizations of the most common quality metrics for each category of scene transitions:

- F1 score, Precision and Recall

- Speed performance depending on the resolution of the input video stream

To participate in our benchmark, please follow the instructions in the How to submit section.

We appreciate new ideas. Please email us at sbd-benchmark@videoprocessing.ai

Leaderboard

The table below shows a comparison of SBD algorithms by F1 score and speed.

Performance Charts

Per-video F1 score on different datasets

Speed/F1 score — resolution dependency

Methodology

Definitions

- Shot — the continuous footage or sequence between two edits or cuts

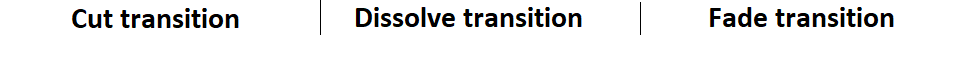

- Cut Transitions — a sudden abrupt transition from one shot to another

- In this case we mark the first frame of the new shot as the cut transition

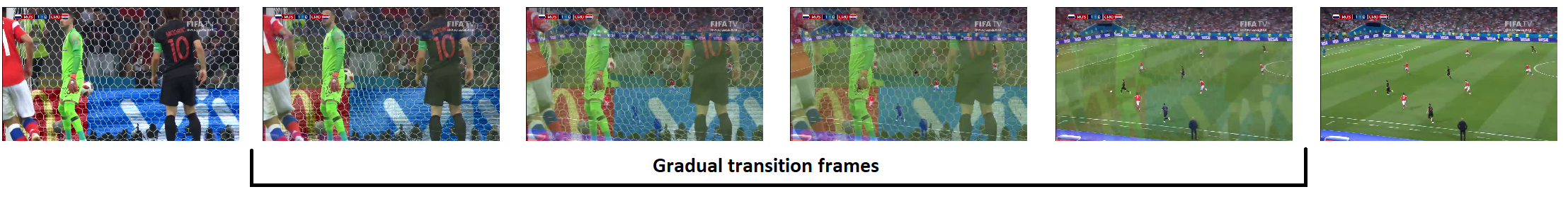

- Gradual Transitions — two shots are combined using chromatic, spatial or spatial-chromatic effects which gradually replace one shot by another

- In this case we mark a continuous series of frames as a gradual transition. An algorithm must report exactly one frame from this series as the gradual transition

- Fade-out and fade-in Transitions are used to describe a transition to and from a blank image
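The marking rules above suggest how detections could be scored against the ground truth. The sketch below is one possible reading, not the benchmark's actual scoring code. It assumes exact frame matches for cuts (no tolerance window), inclusive frame ranges for gradual transitions, non-overlapping ground-truth transitions, and that any extra detection inside an already matched gradual transition counts as a false positive. The function name `match_detections` and its arguments are hypothetical.

```python
from bisect import bisect_right


def match_detections(cut_frames, gradual_ranges, detected_frames):
    """Count (true positives, false positives, false negatives).

    cut_frames: ground-truth cuts, each the first frame of a new shot.
    gradual_ranges: ground-truth gradual transitions as inclusive
        (first_frame, last_frame) pairs; assumed not to overlap.
    detected_frames: frame numbers reported by the algorithm.
    """
    cuts = set(cut_frames)
    ranges = sorted(tuple(r) for r in gradual_ranges)
    starts = [first for first, _ in ranges]

    hit_cuts, hit_ranges = set(), set()
    false_positives = 0

    for frame in sorted(set(detected_frames)):
        if frame in cuts:
            hit_cuts.add(frame)
            continue
        # Index of the last gradual transition starting at or before `frame`.
        idx = bisect_right(starts, frame) - 1
        inside = idx >= 0 and frame <= ranges[idx][1]
        if inside and idx not in hit_ranges:
            # First detection inside this gradual transition: a match.
            hit_ranges.add(idx)
        else:
            # Outside every transition, or a repeated hit on the same one.
            false_positives += 1

    true_positives = len(hit_cuts) + len(hit_ranges)
    false_negatives = len(cuts) + len(ranges) - true_positives
    return true_positives, false_positives, false_negatives


# Two cuts and one dissolve; the second hit on the dissolve and the
# detection at frame 400 are both counted as false positives.
print(match_detections([100, 250], [(300, 320)], [100, 305, 310, 400]))  # -> (2, 2, 1)
```

A real evaluation may well allow a tolerance of a few frames around each cut; that would be a small change to the `frame in cuts` test.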

Video Dataset

Our dataset is constantly being updated. It currently consists of 50 videos with a total duration of about 1287 minutes and approximately 10883 scene transitions (7501 abrupt and 3382 gradual).

Our collection contains videos from the popular RAI dataset, videos from the MSU codecs comparison 2019 and 2020 test sets, and videos collected from other sources. Our analysis showed that the ground truth in RAI contains imperfections, which we fixed in our collection. The datasets were extended by replacing every cut transition in them with fades and dissolves. We manually annotated 19 open-source videos with a total length of 950+ minutes using Yandex.Toloka.

The final dataset contains videos with resolutions from 360×288 to 1920×1080 in MP4 and MKV formats. The videos are in RGB color or grayscale, with frame rates from 23 to 60 FPS.

Detailed SI-TI of each scene in dataset

Dataset examples

Evaluation Steps

| Step | Description |
|---|---|
| 1. Launches | We launch your algorithm with the given options (or tune them if you would like us to) and measure its running time. |
| 2. Calculation | From the shot boundaries your algorithm reports, we calculate F1 score, Precision and Recall. |
| 3. Verification | We send you the final results of your algorithm, including metrics and visualisations on our video dataset. |
| 4. Publication | If you give us permission, we add the results to our benchmark's main page alongside the other algorithms. |
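For reference, the metrics from the Calculation step are conventionally defined as follows. The symbols are notation introduced here, and we assume the benchmark uses these standard forms: TP is the number of correctly detected transitions, FP the number of detections that match no ground-truth transition, and FN the number of ground-truth transitions that were missed.

```latex
\[
\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad
\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}
\]
```

For example, with TP = 2, FP = 2 and FN = 1, Precision is 2/4 = 0.5, Recall is 2/3 ≈ 0.667 and F1 is 4/7 ≈ 0.571.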

How to submit

Send us an email to sbd-benchmark@videoprocessing.ai with the following:

- An executable file (.exe) containing your algorithm, with instructions for running it from the console. When launched from the console, we expect your file to accept the following options:

  - `-i`: path to the input video

  - `-o`: path to the output of your algorithm

  - `-t`: threshold, if your algorithm requires one

- Whether you give us permission to publish the results of your algorithm.

- (Optional) Any additional information about your algorithm.

- (Optional) If you would like us to tune the parameters of your method, describe in the instructions how we can change them.
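As an illustration of the expected command-line options, here is a minimal Python sketch of an entry point that accepts `-i`, `-o` and `-t`. Everything beyond those three option names is an assumption: the output format (one frame number per line) is not specified on this page, `detect_shot_boundaries` is a hypothetical placeholder for your own method, and a Python script would still have to be packaged as an executable before submission.

```python
import argparse


def build_parser():
    """Parser for the three options named in the submission instructions."""
    parser = argparse.ArgumentParser(description="Hypothetical shot boundary detector")
    parser.add_argument("-i", dest="input", required=True,
                        help="path to input video")
    parser.add_argument("-o", dest="output", required=True,
                        help="path to output of the algorithm")
    parser.add_argument("-t", dest="threshold", type=float, default=None,
                        help="threshold, if the algorithm requires one")
    return parser


def detect_shot_boundaries(video_path, threshold):
    """Placeholder: replace with your detector. Returns frame numbers."""
    return []


def write_boundaries(frames, path):
    """Assumed output format: one detected frame number per line."""
    with open(path, "w", encoding="utf-8") as out:
        for frame in frames:
            out.write(f"{frame}\n")


def main(argv=None):
    args = build_parser().parse_args(argv)
    frames = detect_shot_boundaries(args.input, args.threshold)
    write_boundaries(frames, args.output)
    return 0

# In a real script, call `raise SystemExit(main())` under the usual
# `if __name__ == "__main__":` guard.
```

With that guard in place, the script could be launched as `python detector.py -i video.mp4 -o boundaries.txt -t 0.5`, where the file names are placeholders.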

You can verify your results on the RAI dataset.

If you have any suggestions or ideas on how to improve our benchmark, please email us at sbd-benchmark@videoprocessing.ai.

Cite us

    @inproceedings{
      author={Gushchin, Alexander and Antsiferova, Anastasia and Vatolin, Dmitriy},
      title={Shot Boundary Detection Method Based on a New Extensive Dataset and Mixed Features},
      year={2021},
      month={11},
      pages={188-198},
      publisher={GraphiCon},
      doi={10.20948/graphicon-2021-3027-188-198},
    }

Contacts

For questions and proposals, please contact us: sbd-benchmark@videoprocessing.ml
