Evaluation Methodology of MSU HDR Video Reconstruction Benchmark: HLG

Problem Definition

High dynamic range (HDR) reconstruction is the process of obtaining HDR photos or videos from their standard dynamic range (SDR) versions. The problem can be approached in different ways; the purpose of this benchmark is to find the best HDR video restoration method in terms of human evaluation. For video restoration, it is important not only to restore pixel brightness but also to increase the bit depth and expand the color space.

Why do we need PQ and HLG?

PQ and HLG are the most popular HDR gamma curves, and they are used in different cases: HLG in television broadcasting and PQ for showing movies.

Since these curves differ significantly, they preserve details differently at different brightness levels. In addition, HLG partially repeats the usual Rec.709 gamma curve, so video recovery for it may be easier.

Please note that we use PQ to calculate some metrics even though the reconstruction target is HLG. The PQ gamma curve better reflects human perception of brightness, which results in a higher correlation between metrics and subjective assessments.
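To make the difference between the two curves concrete, here is a minimal Python sketch of the PQ inverse EOTF (SMPTE ST 2084) and the HLG OETF (ITU-R BT.2100). The function names `pq_encode` and `hlg_oetf` are chosen for illustration only and are not part of the benchmark tooling. Note that PQ takes absolute display luminance in nits, while HLG takes relative scene light in [0, 1], so the two are not directly interchangeable.

```python
import math

# SMPTE ST 2084 (PQ) constants.
PQ_M1 = 2610 / 16384
PQ_M2 = 2523 / 4096 * 128
PQ_C1 = 3424 / 4096
PQ_C2 = 2413 / 4096 * 32
PQ_C3 = 2392 / 4096 * 32

# ITU-R BT.2100 HLG constants.
HLG_A = 0.17883277
HLG_B = 1 - 4 * HLG_A
HLG_C = 0.5 - HLG_A * math.log(4 * HLG_A)


def pq_encode(nits):
    """PQ inverse EOTF: absolute luminance in nits -> code value in [0, 1]."""
    y = min(max(nits, 0.0), 10000.0) / 10000.0
    y_m1 = y ** PQ_M1
    return ((PQ_C1 + PQ_C2 * y_m1) / (1 + PQ_C3 * y_m1)) ** PQ_M2


def hlg_oetf(scene_light):
    """HLG OETF: relative scene light in [0, 1] -> code value in [0, 1]."""
    e = min(max(scene_light, 0.0), 1.0)
    if e <= 1 / 12:
        # Square-root segment, similar in shape to a conventional SDR gamma.
        return math.sqrt(3 * e)
    # Logarithmic segment for highlights.
    return HLG_A * math.log(12 * e - HLG_B) + HLG_C


if __name__ == "__main__":
    for nits in (1, 100, 1000, 10000):
        print(f"PQ  {nits:>5} nits -> {pq_encode(nits):.4f}")
    for e in (0.01, 1 / 12, 0.5, 1.0):
        print(f"HLG {e:.4f}      -> {hlg_oetf(e):.4f}")
```

With PQ, 100 nits lands near code value 0.51 and 1000 nits near 0.75, so a large part of the code range is spent on dark and mid tones, which is one reason this curve is considered close to perceived brightness.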

At the moment, we have published a study of only the HLG part.

The part with the study of PQ-HDR video reconstruction will be published soon.

Dataset

We present a new private dataset for this comparison. This was done to ensure that neural network methods do not benefit from training on data that could end up in our test sample. The authors of the article “How to cheat with metrics in single-image HDR reconstruction” describe this problem in detail. Here we provide the key characteristics of the dataset.

SDR* – tonemapped HDR video

More details about the dataset characteristics and processing can be found in the Dataset tab.

Metrics

Evaluation

Splitting into frames

Each SDR video was split into frames with the following command:

ffmpeg -i in.mov %04d.png

This was done because all methods (except Maxon at the moment) take individual images as input. For every method that offers a video restoration option, that option was enabled.
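When many videos have to be split, the same command can be wrapped in a small script. The sketch below is only an illustration: the helper names `build_split_command` and `split_video` and the one-directory-per-video layout are assumptions, not part of the benchmark pipeline. It requires `ffmpeg` to be available on the PATH.

```python
import subprocess
from pathlib import Path


def build_split_command(video_path, frames_dir):
    """Build the ffmpeg call that writes frames as 0001.png, 0002.png, ..."""
    pattern = str(Path(frames_dir) / "%04d.png")
    return ["ffmpeg", "-i", str(video_path), pattern]


def split_video(video_path, frames_dir):
    """Create the output directory and run ffmpeg; raises on a non-zero exit."""
    Path(frames_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_split_command(video_path, frames_dir), check=True)


if __name__ == "__main__":
    # Hypothetical input file and output directory.
    split_video("in.mov", "frames/in")
```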

Video generation

Different methods are trained to output video frames in different formats. The target format of our benchmark is:

- Maximum brightness: 1000 nits

- Color space: Rec.2020

Therefore, the output of each algorithm was processed individually in DaVinci Resolve 17 using the built-in Color Space Transform tool.

We use Color Space Transform twice: for encoding with the HLG gamma curve and for encoding with the PQ gamma curve. We use the first one as a preparation for the future subjective comparison. The second one is used for metrics calculations.

We use the PQ gamma curve instead of HLG to calculate metrics because studies have shown that this approach is the most correct way to apply metrics that were developed for SDR content.

The details of the output of each method are described below. Each bullet describes the process of converting the luminance and color space to the one we require. As mentioned above, after that we encode the video with two different gamma curves.

-

HDR-CNN, ExpNet, DeepHDR

These methods predict relative HDR luminance values in the range [0,1] with a linear gamma curve and do not change the color space. We use Luminance Mapping to map the value of 1 (which corresponds to 100 nit) to 1000 nit. Since the output of these methods is in 32-bit .exr format, this conversion does not produce banding if the low bits are filled with significant information. We also change the color space, since these methods do not restore Rec.2020. -

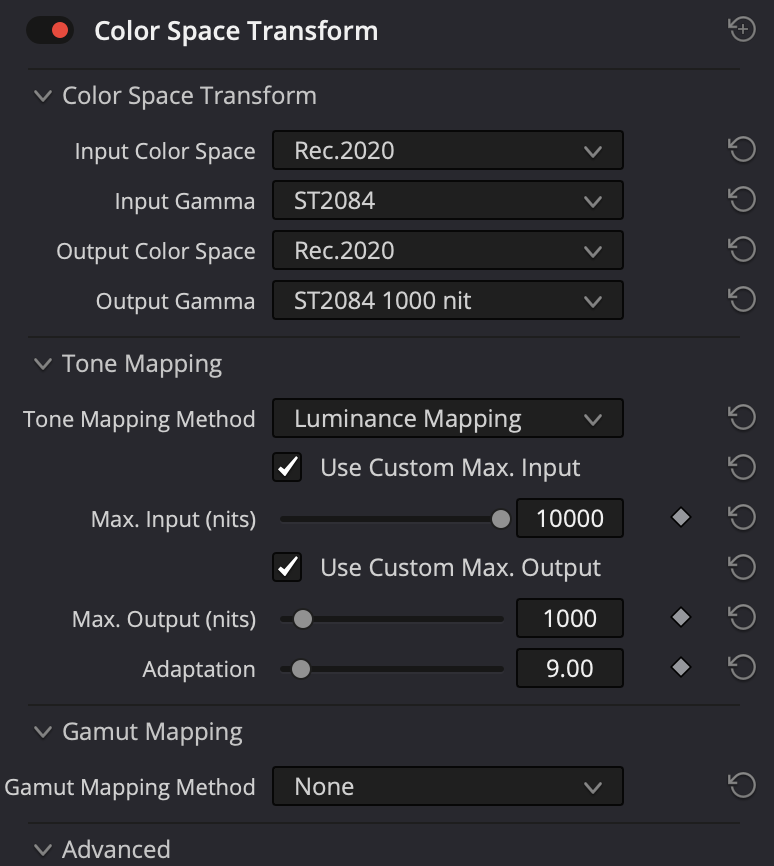

SingleHDR, HDRToolbox*

The output frames of these methods are in linear gamma and have absolute luminance values in the range [0, 10000] nit. So we use Luminance Mapping from 10000 nit to 1000 nit and change the color space to Rec.2020. -

Maxon

For testing the “Highlight Boost” option was set to the maximum value (100), then the standard video export procedure was performed. -

HDRTVNet

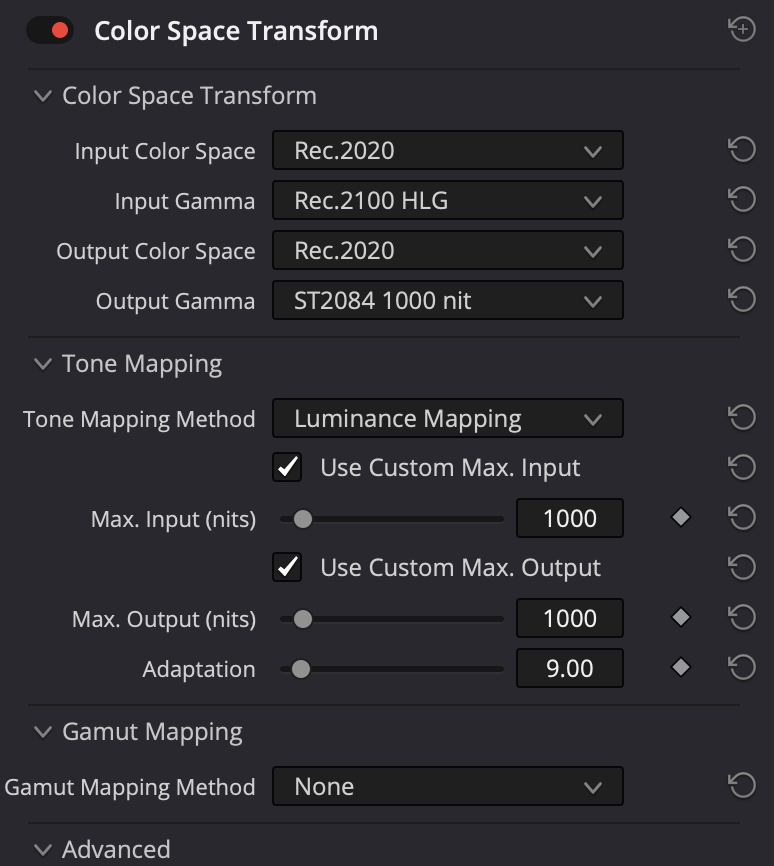

This method is specifically designed to restore a video with a PQ gamma curve. Since the default luminance values for this curve are 10000 nit we used Luminance Mapping from 10000 nit to 1000 nit. We do not change the color space for this method since its output is already in Rec.2020. -

GT

Since the camera we shot the dataset with applies the ITU-R BT.2100 HLG gamma curve, we re-encode the video to “SMPTE ST 2084 1000 nit” in order to calculate the metrics.
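The per-method conversions above can be illustrated on a single linear-light pixel. The sketch below is not a reproduction of DaVinci Resolve's Color Space Transform: the exact curve behind Luminance Mapping is not described here, so the plain linear rescale is an assumption, and all function names are hypothetical. The matrix is the linear-light Rec.709 to Rec.2020 conversion from ITU-R BT.2087.

```python
# Linear-light Rec.709 -> Rec.2020 conversion matrix (ITU-R BT.2087).
REC709_TO_REC2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)

TARGET_PEAK_NITS = 1000.0  # benchmark target: maximum brightness of 1000 nits


def relative_to_nits(rgb, target_peak=TARGET_PEAK_NITS):
    """Relative linear values in [0, 1]: map 1.0 to the target peak."""
    return tuple(c * target_peak for c in rgb)


def absolute_to_nits(rgb_nits, source_peak=10000.0, target_peak=TARGET_PEAK_NITS):
    """Absolute values in [0, source_peak] nits.

    Assumption: a plain linear rescale; the real tool may apply a
    non-linear tone curve instead.
    """
    scale = target_peak / source_peak
    return tuple(c * scale for c in rgb_nits)


def rec709_to_rec2020(rgb):
    """Re-express linear Rec.709 RGB in Rec.2020 primaries."""
    return tuple(
        sum(m * c for m, c in zip(row, rgb)) for row in REC709_TO_REC2020
    )


if __name__ == "__main__":
    pixel = (1.0, 0.5, 0.0)  # hypothetical relative linear Rec.709 pixel
    print(rec709_to_rec2020(relative_to_nits(pixel)))
```

Both steps operate on linear light, so they have to be applied before the HLG or PQ gamma curve is used for encoding.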

The pictures below show all Color Space Transform settings:

HDR-CNN, DeepHDR, ExpNet

SingleHDR, HDRToolbox*

HDRTVNet

GT

This way we obtain a unified output of all methods (including GT) and then use the MSU VQMT utility and official metrics repositories to calculate the results.

This stage is very important: if pixel values are interpreted incorrectly, the metric values may be distorted. Please include detailed video rendering instructions in your benchmark submission.

Subjective study

We use a MacBook Pro 14 (2021) to show videos to participants. Main characteristics:

- Peak brightness: 1600 nits

- Screen size: 14.2 inches

- Resolution: 3024x1964

We have collected 2556 votes from 25 people (ages 19-45) so far. We apply the Bradley-Terry model to convert the votes into subjective scores.
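As an illustration of how pairwise votes turn into scores, here is a minimal sketch of fitting a Bradley-Terry model with the standard minorization-maximization (MM) update. It is not the benchmark's own implementation; the function name `fit_bradley_terry`, the vote format, and the method labels are hypothetical. The sketch assumes every method has at least one win and that all methods are connected through comparisons.

```python
def fit_bradley_terry(wins, iterations=200):
    """Fit Bradley-Terry strengths from pairwise votes.

    wins[(a, b)] is the number of votes preferring method a over method b.
    Returns a dict of strengths normalized to sum to 1.
    """
    items = sorted({name for pair in wins for name in pair})
    scores = {name: 1.0 / len(items) for name in items}
    for _ in range(iterations):
        updated = {}
        for i in items:
            total_wins = sum(n for (a, b), n in wins.items() if a == i)
            denom = 0.0
            for j in items:
                if j == i:
                    continue
                # Number of times i and j were compared, in either order.
                n_ij = wins.get((i, j), 0) + wins.get((j, i), 0)
                if n_ij:
                    denom += n_ij / (scores[i] + scores[j])
            updated[i] = total_wins / denom if denom else scores[i]
        norm = sum(updated.values())
        scores = {name: value / norm for name, value in updated.items()}
    return scores


if __name__ == "__main__":
    # Hypothetical votes between three methods.
    votes = {
        ("A", "B"): 8, ("B", "A"): 2,
        ("B", "C"): 7, ("C", "B"): 3,
        ("A", "C"): 9, ("C", "A"): 1,
    }
    for name, score in sorted(fit_bradley_terry(votes).items()):
        print(name, round(score, 3))
```

Under this model the probability that method i is preferred over method j is score_i / (score_i + score_j), so the fitted strengths give a ranking that accounts for which methods each one was compared against.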

HDRToolbox* – a group of methods implemented in HDRToolbox: Akyuz, Kuo, Huo, HuoPhys, KovOliv
