Automatic color mismatch estimation in S3D videos using confidence maps

- Author: Stanislav Grokholsky

- Supervisor: Dr. Dmitriy Vatolin

Introduction

Discomfort while watching stereoscopic video is often caused by mismatches between the left and right views. One of the most common of these distortions is color mismatch between the views.

We propose a new confidence-map-based algorithm that automatically detects the frames containing color distortions between two views of a stereoscopic video.

Proposed method

Using confidence maps allows the algorithm to detect unreliable disparity values and therefore reduces the number of false positives produced by our approach.

The proposed algorithm is a modified baseline model that consists of the following stages:

- Global color correction of the right view

- Matching of the right and left views

- Compensation of the left view to the right view based on the matching results

- Cutting off part of the minimum and maximum difference values between the right and compensated left views, filtering views to restore the discarded values

- Calculation of the normalized sum of squared difference values
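The last two stages of the baseline can be illustrated with a minimal sketch. It assumes the right view and the compensated left view are already available as flat sequences of pixel intensities. The function name `trimmed_normalized_ssd` and the `trim_fraction` parameter are hypothetical and do not come from the original implementation. The filtering step that restores discarded values is not reproduced here.

```python
def trimmed_normalized_ssd(right, compensated_left, trim_fraction=0.05):
    """Normalized sum of squared differences after trimming extremes.

    right, compensated_left: equal-length flat sequences of pixel intensities.
    trim_fraction: assumed share of difference values dropped at EACH end
                   of the sorted differences (illustrative value).
    """
    if len(right) != len(compensated_left):
        raise ValueError("views must have the same number of pixels")

    # Signed per-pixel differences, sorted so the extremes sit at the ends.
    diffs = sorted(r - c for r, c in zip(right, compensated_left))

    # Cut off the smallest and largest difference values.
    k = int(len(diffs) * trim_fraction)
    kept = diffs[k:len(diffs) - k] if k > 0 else diffs
    if not kept:
        return 0.0

    # Normalize by the number of values that survived trimming.
    return sum(d * d for d in kept) / len(kept)
```

With a single outlier pixel and `trim_fraction=0.1` on ten pixels, the outlier is dropped and the score stays at zero, which shows why trimming makes the measure less sensitive to isolated matching errors.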

The key difference from the previous version of the algorithm is the use of confidence maps, which significantly reduces the number of false positives. Additionally, the value filtering in step 4 of the basic algorithm was modified, and the mean squared error (MSE) function was replaced with the sum of absolute differences (SAD).
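One way a confidence map could enter a SAD-based score is sketched below. This is an assumption for illustration, not the authors' exact formulation: the text does not specify how confidence values are combined with the differences. The function name, the per-pixel weighting, and the `min_confidence` cutoff are all hypothetical.

```python
def confidence_weighted_sad(right, compensated_left, confidence,
                            min_confidence=0.5):
    """Confidence-weighted, normalized sum of absolute differences.

    confidence: per-pixel values in [0, 1]; higher means the disparity
                (and therefore the compensated pixel) is more reliable.
    min_confidence: assumed cutoff below which a pixel is ignored.
    """
    total = 0.0
    weight = 0.0
    for r, c, w in zip(right, compensated_left, confidence):
        if w < min_confidence:
            continue  # unreliable match: do not let it affect the score
        total += w * abs(r - c)
        weight += w
    # Normalize by the accumulated confidence so scores are comparable
    # between frames with different amounts of reliable area.
    return total / weight if weight > 0 else 0.0
```

In this sketch, a pixel with a large color difference but low confidence contributes nothing, so a matching error is less likely to be reported as a color mismatch.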

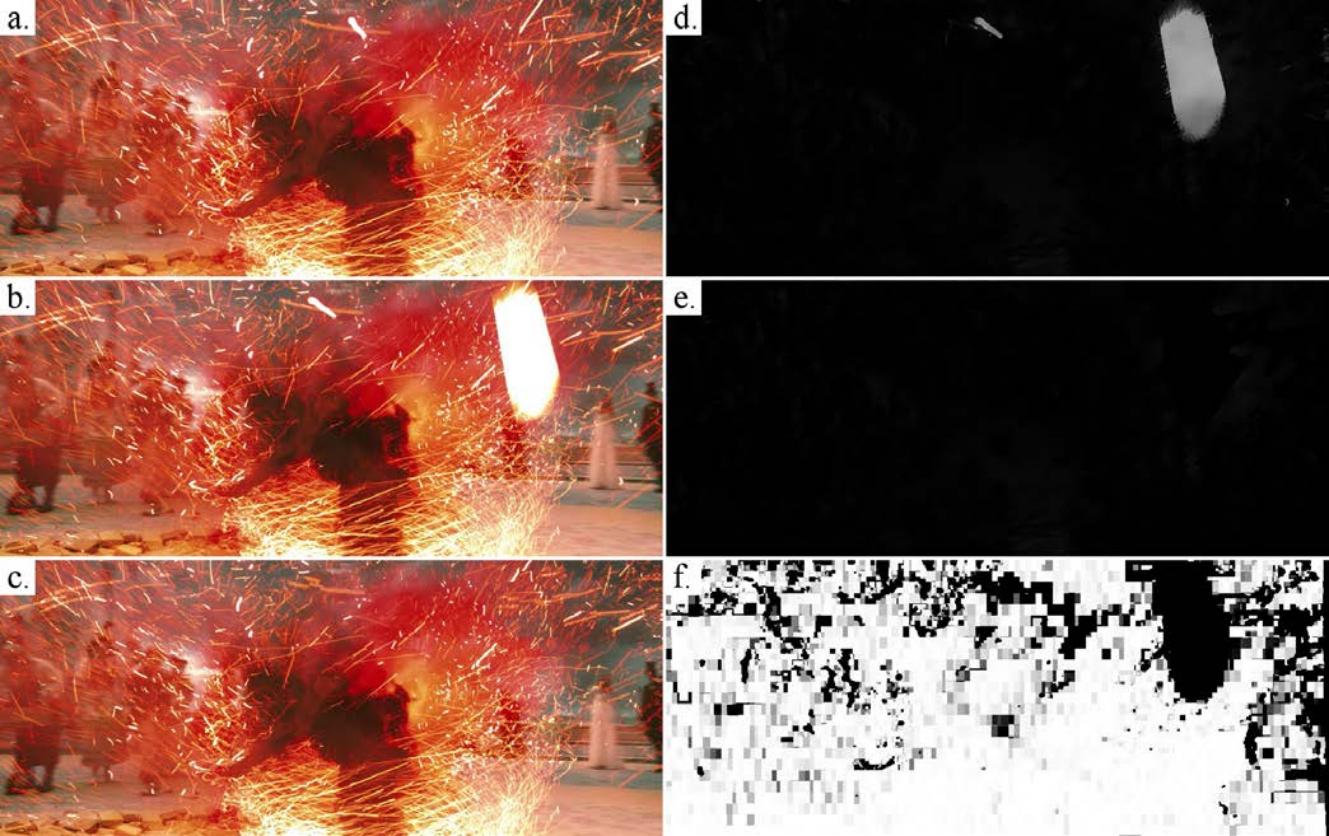

a — left view

b — right view

c — compensated left view

d — visualization of the result of the basic algorithm

e — visualization of the result using confidence

f — visualization of the confidence map

Experiments

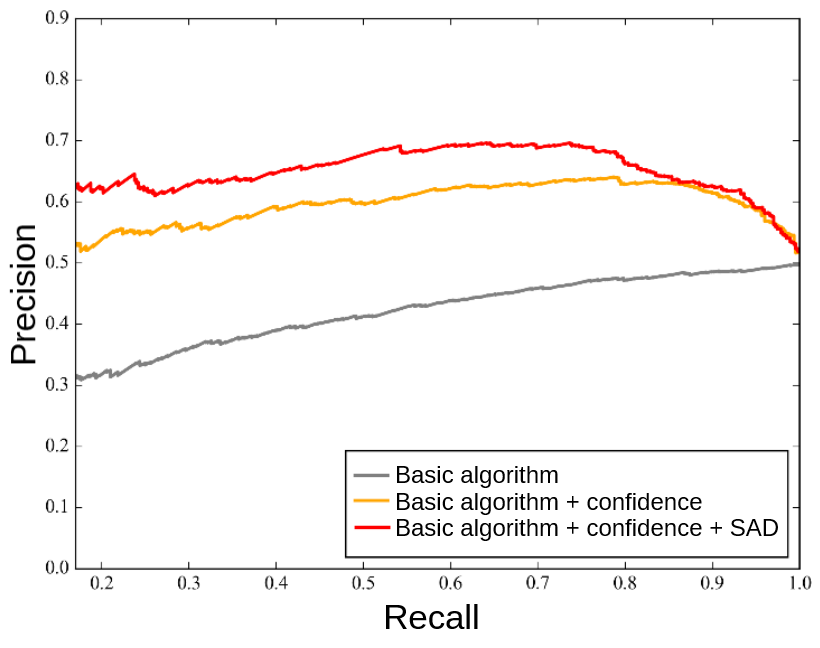

Comparisons were based on scenes sampled from 105 stereoscopic movies. Only scenes where the output of the baseline algorithm exceeded a preset threshold were selected, giving a sample of 957 frames. All selected frames were then manually classified as true positives or false positives.
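Given such manual labels, a modification can be assessed by counting how many labeled frames it still flags. The sketch below is a hypothetical helper for this bookkeeping. The function name, inputs, and threshold are illustrative assumptions and are not taken from the original experiments.

```python
def count_detections(scores, labels, threshold):
    """Count true and false positives that remain above a threshold.

    scores: per-frame output of the algorithm variant under test.
    labels: True if the frame was manually marked as a true positive,
            False if it was marked as a false positive.
    """
    tp = sum(1 for s, is_true in zip(scores, labels)
             if s > threshold and is_true)
    fp = sum(1 for s, is_true in zip(scores, labels)
             if s > threshold and not is_true)
    return tp, fp
```

A variant that performs better keeps most frames labeled as true positives above the threshold while pushing frames labeled as false positives below it.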

Comparison of the basic algorithm with various modifications

During testing, the proposed modifications showed better results compared to the basic version of the algorithm.
