Video Quality Measurement Tool 3D

Introduction

The VQMT3D (Video Quality Measurement Tool 3D) project was created to improve the quality of stereoscopic films. Our aim is to help filmmakers produce high-quality 3D video by finding inexpensive ways to improve 3D film quality automatically. Technical errors made during the production of stereoscopic 3D movies are often neglected, but our experiments indicate that these errors cause headaches in viewers.

“I will find it very interesting to go through your report in detail, film by film. I had always thought that a major factor holding back the greater success of stereo 3D cinema could be technical problems like those your group has enumerated.”

Many people stop watching 3D movies after experiencing discomfort just once. We therefore focus on finding and fixing technical problems that could potentially cause headaches. These are the key contributions of the project:

#1 metric collection for stereo quality assessment

We have developed the largest set of metrics for detecting technical problems in stereoscopic movies.

- 18 metrics in total

- 14 metrics, including 5 unique ones, provide quality estimation of 2D-to-3D conversion

#1 stereoscopic movie quality evaluation

A large-scale evaluation of full-length 3D Blu-ray discs with detailed visualizations of technical errors.

- Over 100 analyzed movies

- 9 reports published for industry professionals

- 3,100 pages in total

- 100 stereographers contacted, 32 of whom contributed to the evaluation

#1 study of stereoscopic error influence on viewers

The largest study of viewer reactions to stereoscopic errors. The collected data is crucial for research on visual fatigue.

- Over 300 people participated

- 22,200 discomfort scores acquired

List of Metrics

Our laboratory has been researching stereo quality and the stereo artifacts that cause headaches for 11 years. During this time we have created about 20 quality metrics and significantly improved several of them. For example, the metric for detecting swapped channels has gone through 3 generations (see the channel mismatch metric), each significantly more accurate than the last. At the same time, our metrics have remained more computationally efficient than comparable metrics developed by other groups, which has allowed us to apply them extensively to real movies.

| Metric | Class | Type | Applicable to |
|---|---|---|---|
| 1. Horizontal disparity | Standard | Measurable | Any content |
| 2. Vertical disparity | Standard | Measurable | Any content |
| 3. Scale mismatch | Standard | Measurable | Any content |
| 4. Rotation mismatch | Standard | Measurable | Any content |
| 5. Color mismatch | Standard | Measurable | Any content |
| 6. Sharpness mismatch | Advanced | Measurable | Native 3D capture |
| 7. Stereo window violation | Advanced | Measurable | Any content |
| 8. Crosstalk noticeability | Advanced | Measurable | Any content |
| 9. Depth continuity | Advanced | Measurable | Any content |
| 10. Cardboard effect | Advanced | Qualitative | 2D-to-3D conversion |
| 11. Edge-sharpness mismatch | Unique | Qualitative | 2D-to-3D conversion |
| 12. Channel mismatch | Unique | Qualitative | Any content |
| 13. Temporal asynchrony | Unique | Measurable | Native 3D capture |
| 14. Stuck-to-background objects | Unique | Qualitative | 2D-to-3D conversion |
| 15. Classification by production type | Unique | Qualitative | Any content |
| 16. Comparison with the 2D version | Unique | Qualitative | 2D-to-3D conversion |
| 17. Perspective distortions | Unique | Qualitative | Any content |
| 18. Converged axes | Unique | Qualitative | Any content |
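The table lists the metrics but not how they are computed. As a rough illustration of the idea behind metrics 1 and 2 only, here is a minimal sketch that estimates one global horizontal and vertical shift between the left and right views by exhaustive block matching. This is not the VQMT3D algorithm: the function names, the search limits `max_dx`/`max_dy`, and the list-of-rows grayscale frame format are assumptions made for this example.

```python
from typing import List, Tuple

# Hypothetical frame format: a grayscale image stored as rows of pixel values.
Image = List[List[float]]


def mean_abs_diff(left: Image, right: Image, dx: int, dy: int) -> float:
    """Mean |right[y][x] - left[y + dy][x + dx]| over pixels present in both views."""
    h, w = len(left), len(left[0])
    total, count = 0.0, 0
    for y in range(h):
        ly = y + dy
        if not 0 <= ly < h:
            continue  # shifted row falls outside the left view
        for x in range(w):
            lx = x + dx
            if 0 <= lx < w:
                total += abs(right[y][x] - left[ly][lx])
                count += 1
    return total / count if count else float("inf")


def estimate_global_shift(left: Image, right: Image,
                          max_dx: int = 8, max_dy: int = 2) -> Tuple[int, int]:
    """Exhaustively search for the (dx, dy) shift that best aligns the two views.

    dx approximates one global horizontal disparity for the whole frame;
    a non-zero dy suggests vertical disparity between the views.
    Both frames are assumed to have the same size.
    """
    best, best_cost = (0, 0), float("inf")
    for dy in range(-max_dy, max_dy + 1):
        for dx in range(-max_dx, max_dx + 1):
            cost = mean_abs_diff(left, right, dx, dy)
            if cost < best_cost:  # keep the first shift with the lowest error
                best, best_cost = (dx, dy), cost
    return best
```

For a well-aligned stereo pair the returned `dy` should be 0. A single global `dx` ignores the fact that disparity varies with scene depth, which is why practical tools estimate dense per-pixel disparity maps instead.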

Reports overview

Many stereographers have asked us, for example, whether an MSU Scale Mismatch value of 4% is high or low for a movie. Initially, we had no clear answer. So we set out to connect metric values directly with perceived discomfort, conducting what is perhaps the largest study of its kind on fragments of real movies containing artifacts.
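The page does not say which statistic links metric values to perceived discomfort. One simple, common way to quantify such a link, shown here purely as an assumed illustration, is to average the viewer scores for each clip and compute the Pearson correlation with the metric value measured on that clip. The clip identifiers, dictionary layout, and function names below are hypothetical.

```python
from math import sqrt
from statistics import mean
from typing import Dict, List, Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


def metric_vs_discomfort(metric_values: Dict[str, float],
                         scores_per_clip: Dict[str, List[float]]) -> float:
    """Correlate a metric value per clip with that clip's mean discomfort score."""
    mean_scores = {clip: mean(s) for clip, s in scores_per_clip.items()}
    clips = sorted(metric_values.keys() & mean_scores.keys())  # clips with both
    return pearson([metric_values[c] for c in clips],
                   [mean_scores[c] for c in clips])
```

Pearson's r captures only linear relationships; a rank correlation such as Spearman's is a common alternative when discomfort grows non-linearly with the metric value.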

Then we approached the problem from another side. With support from Intel, Cisco and Verizon, we bought more than 150 Blu-ray 3D movies and ran them all through our metrics. The project was complex both technically and organizationally, but as a result we got a clear picture of how metric values depend on a movie's release date, budget and production technology.

Viewer discomfort study

We conducted a study to determine how much discomfort each type of stereoscopic error causes viewers. Our experiment produced more data than any comparable experiment worldwide.

| University | #subjects | #subjects per video | Duration | #videos | #videos per test | #scores | Year | 3D tech. | Stimuli |
|---|---|---|---|---|---|---|---|---|---|
| University of Surrey | 30 | 30 | 25 | 40 | 40 | 1200 | 2010 | Autostereo. | Synthetic S3D seq., encoding with different QP |
| Catholic University of Korea | 20 | 20 | 18 | 36 | 36 | 720 | 2011 | Passive | Captured S3D seq., different parallax and motion |
| Telecom Innovation Labs | 24 | 24 | 50 | 64 | 64 | 1536 | 2011 | Active | Open S3D DB, different parallax |
| Philips Research Labs | 24 | 24 | 24 | 7 | 7 | 168 | 2011 | Autostereo. | 3D movie (converted), different parallax |
| Beijing Institute of Ophthal. | 30 | 30 | 30 | 1 | 1 | 30 | 2012 | Active+passive | 3D movie (captured) |
| LUNAM University | 29 | 29 | 28 | 110 | 110 | 3190 | 2012 | Active | Open S3D DB (NAMA3DS1-COSPAD1), different degradations |
| Yonsei University | 28 | 28 | 29 | 110 | 110 | 3080 | 2013 | Passive | Open S3D DB, 10 degradation types |
| Acreo Institute | 48 | 28 | 29 | 110 | 110 | 5280 | 2013 | Passive | Open S3D DB, 10 degradation types |
| Tampere University of Tech. | 10 | 10 | 45 | 40 | 40 | 400 | 2013 | Passive | Captured S3D seq., encoding with different QP |
| Roma Tre University | 854 | 43-255 | 90 | - | 1 | 854 | 2014 | Passive | Screening after watching S3D movies in cinemas |
| Yonsei University | 56 | 56 | 10.5 | 30.1 | 31 | 1736 | 2014 | Autostereo. | Open S3D DB, different SI and TI |
| University College Dublin | 40 | 4 | 5.5 | 25.11 | 33 | 1320 | 2015 | Active | Open S3D DB, packet losses |
| University of British Columbia | 88 | 88 | 48 | 208 | 208 | 18304 | 2015 | Passive | Open S3D DB, different SI/TI and compression degradations |
| University North | 146 | 18 | 8 | 184 | 26 | 146 | 2016 | Active | Open S3D DB, 22 degradation types (packet losses, encoding, resizing, disparity, brightness, geometry etc.) |
| University of Coimbra | 35 | 2-6 | 7-1 | 184 | 26 | 146 | 2016 | Active | Open S3D DB, 22 degradation types (packet losses, encoding, resizing, disparity, brightness, geometry etc.) |
| Lomonosov MSU | 302 | 370 | 40 | 60 | 60 | 22200 | 2017 | Passive | Scenes from 3D movies (captured), 4 types of S3D distortions, 5 intensities |
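The comparison above counts raw scores per study. A common way to turn such ratings into per-condition results is a mean opinion score with a confidence interval for each condition. The sketch below does this for ratings grouped by distortion type and intensity (the MSU row lists 4 distortion types at 5 intensities); the tuple layout, labels, and normal-approximation interval are assumptions for illustration, not a description of how the MSU study was analyzed.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean, stdev
from typing import Dict, Iterable, List, Tuple

# Hypothetical grouping key: (distortion type label, intensity level).
Key = Tuple[str, int]


def summarize_scores(ratings: Iterable[Tuple[str, int, float]],
                     z: float = 1.96) -> Dict[Key, Tuple[float, float, int]]:
    """Return {(distortion, intensity): (mean score, CI half-width, n)}.

    The half-width uses a normal approximation: z * sample_stdev / sqrt(n).
    Groups with a single rating get NaN, since their spread cannot be estimated.
    """
    groups: Dict[Key, List[float]] = defaultdict(list)
    for distortion, intensity, score in ratings:
        groups[(distortion, intensity)].append(score)
    summary: Dict[Key, Tuple[float, float, int]] = {}
    for key, scores in groups.items():
        n = len(scores)
        half = z * stdev(scores) / sqrt(n) if n > 1 else float("nan")
        summary[key] = (mean(scores), half, n)
    return summary
```

With small groups a Student's t quantile is more appropriate than the fixed `z = 1.96`; the normal value is used here only to keep the sketch dependency-free.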

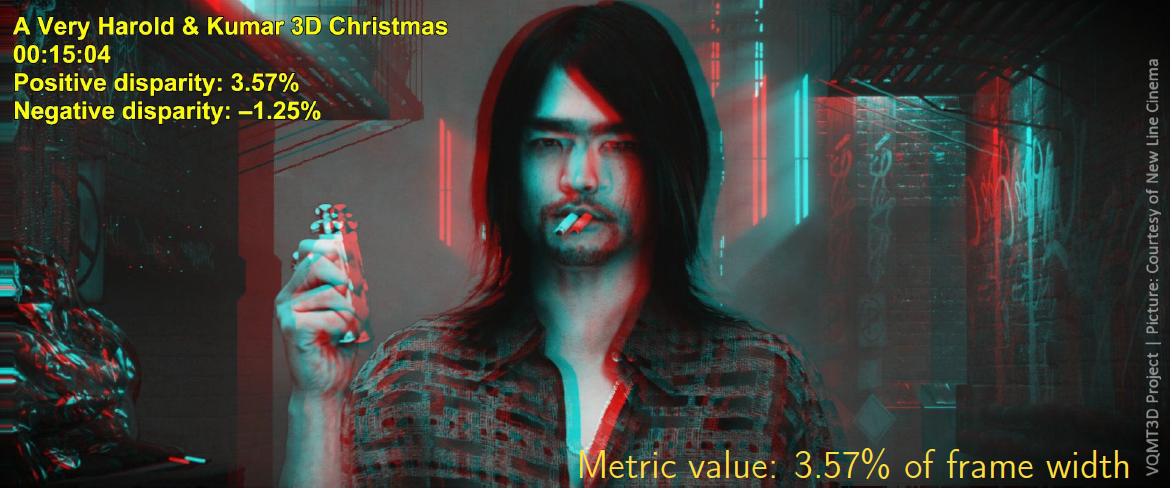

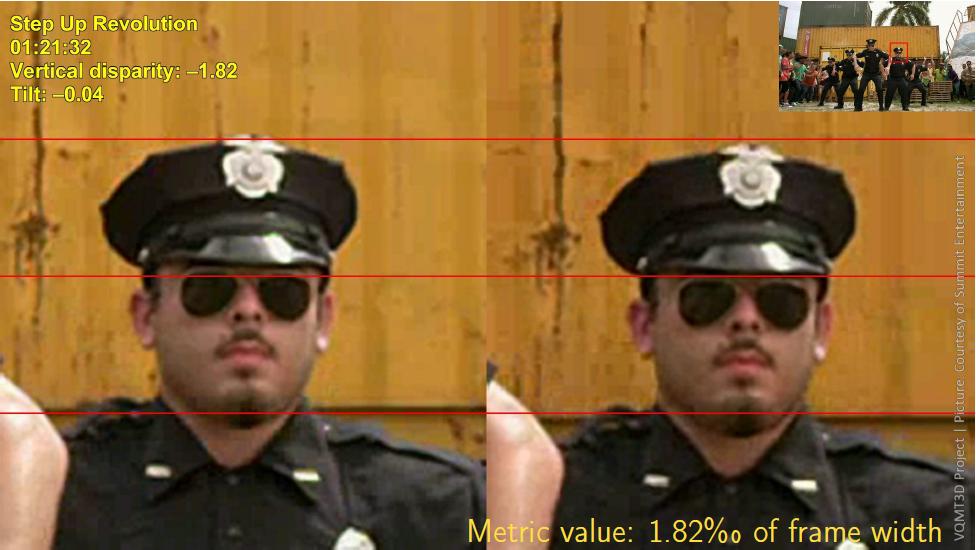

Visualization of stereoscopic errors

Our visualizations clearly show where stereoscopic errors occur, and they are included in our movie-analysis reports.

An example of detected strong horizontal disparity from A Very Harold & Kumar 3D Christmas

An example of detected vertical disparity from Step Up Revolution

An example of detected color mismatch from The Amazing Spider-Man

An example of detected sharpness mismatch from Alice in Wonderland

Publications

If you want to cite this project, please refer to one of the following publications:

- Mikhail Erofeev, Dmitriy Vatolin, Alexander Voronov, Alexey Fedorov, “Toward an Objective Stereo-Video Quality Metric: Depth Perception of Textured Areas,” International Conference on 3D Imaging, 2012. doi:10.1109/IC3D.2012.6615120

- Dmitriy Akimov, Alexey Shestov, Alexander Voronov, Dmitriy Vatolin, “Automatic Left-Right Channel Swap Detection,” International Conference on 3D Imaging, 2012. doi:10.1109/IC3D.2012.6615126

- Alexander Voronov, Alexey Borisov, Dmitriy Vatolin, “System for automatic detection of distorted scenes in stereo video,” International Workshop on Video Processing and Quality Metrics for Consumer Electronics (VPQM-2012), pp. 138–143, 2012.

- Alexander Voronov, Dmitriy Vatolin, Denis Sumin, Vyacheslav Napadovsky, Alexey Borisov, “Towards Automatic Stereo-video Quality Assessment and Detection of Color and Sharpness Mismatch,” International Conference on 3D Imaging, 2012. doi:10.1109/IC3D.2012.6615121

- Alexander Voronov, Dmitriy Vatolin, Denis Sumin, Vyacheslav Napadovsky, Alexey Borisov, “Methodology for stereoscopic motion-picture quality assessment,” Proc. SPIE 8648, Stereoscopic Displays and Applications XXIV, vol. 8648, pp. 864810-1–864810-14, 2013. doi:10.1117/12.2008485

- Alexander Bokov, Dmitriy Vatolin, Anton Zachesov, Alexander Belous, Mikhail Erofeev, “Automatic detection of artifacts in converted S3D video,” Proc. SPIE 9011, Stereoscopic Displays and Applications XXV (March 6, 2014), 901112, 2014. doi:10.1117/12.2054330

- Stanislav Dolganov, Mikhail Erofeev, Dmitriy Vatolin, Yury Gitman, “Detection of stuck-to-background objects in converted S3D movies,” 2015 International Conference on 3D Imaging (IC3D), 2015. doi:10.1109/IC3D.2015.7391839

- Yury Gitman, Can Bal, Mikhail Erofeev, Ankit Jain, Sergey Matyunin, Kyoung-Rok Lee, Alexander Voronov, Jason Juang, Dmitriy Vatolin, Truong Nguyen, “Delivering Enhanced 3D Video,” Intel Technology Journal, vol. 19, pp. 162–200, 2015.

- Dmitriy Vatolin, Alexander Bokov, Mikhail Erofeev, Vyacheslav Napadovsky, “Trends in S3D-Movie Quality Evaluated on 105 Films Using 10 Metrics,” Proceedings of Stereoscopic Displays and Applications XXVII, pp. SDA-439.1–SDA-439.10, 2016. doi:10.2352/ISSN.2470-1173.2016.5.SDA-439

- Alexander Bokov, Sergey Lavrushkin, Mikhail Erofeev, Dmitriy Vatolin, Alexey Fedorov, “Toward fully automatic channel-mismatch detection and discomfort prediction for S3D video,” 2016 International Conference on 3D Imaging (IC3D), 2016. doi:10.1109/IC3D.2016.7823462

- Dmitriy Vatolin, Sergey Lavrushkin, “Investigating and predicting the perceptibility of channel mismatch in stereoscopic video,” Moscow University Computational Mathematics and Cybernetics, vol. 40, pp. 185–191, 2016. doi:10.3103/s0278641916040075

- Anastasia Antsiferova, Dmitriy Vatolin, “The influence of 3D video artifacts on discomfort of 302 viewers,” 2017 International Conference on 3D Immersion (IC3D), 2017. doi:10.1109/IC3D.2017.8251897

- Dmitriy Vatolin, Alexander Bokov, “Sharpness Mismatch and 6 Other Stereoscopic Artifacts Measured on 10 Chinese S3D Movies,” Proceedings of Stereoscopic Displays and Applications XXVIII, pp. 137–144, 2017. doi:10.2352/ISSN.2470-1173.2017.5.SDA-340

- Sergey Lavrushkin, Vitaliy Lyudvichenko, Dmitriy Vatolin, “Local Method of Color-Difference Correction Between Stereoscopic-Video Views,” Proceedings of the 2018 3DTV Conference: The True Vision - Capture, Transmission and Display of 3D Video (3DTV-CON), 2018. doi:10.1109/3DTV.2018.8478453

- Aidar Khatiullin, Mikhail Erofeev, Dmitriy Vatolin, “Fast Occlusion Filling Method For Multiview Video Generation,” Proceedings of the 2018 3DTV Conference: The True Vision - Capture, Transmission and Display of 3D Video (3DTV-CON), 2018. doi:10.1109/3DTV.2018.8478562

- Sergey Lavrushkin, Dmitriy Vatolin, “Channel-Mismatch Detection Algorithm for Stereoscopic Video Using Convolutional Neural Network,” Proceedings of the 2018 3DTV Conference: The True Vision - Capture, Transmission and Display of 3D Video (3DTV-CON), 2018. doi:10.1109/3DTV.2018.8478542

- Alexander Ploshkin, Dmitriy Vatolin, “Accurate Method of Temporal Shift Estimation for 3D Video,” Proceedings of the 2018 3DTV Conference: The True Vision - Capture, Transmission and Display of 3D Video (3DTV-CON), 2018. doi:10.1109/3DTV.2018.8478431

- Sergey Lavrushkin, Konstantin Kozhemyakov, Dmitriy Vatolin, “Neural-Network-Based Detection Methods for Color, Sharpness, and Geometry Artifacts in Stereoscopic and VR180 Videos,” International Conference on 3D Immersion (IC3D), 2020. doi:10.1109/IC3D51119.2020.9376385

- Kirill Malyshev, Sergey Lavrushkin, Dmitriy Vatolin, “Stereoscopic Dataset from A Video Game: Detecting Converged Axes and Perspective Distortions in S3D Videos,” International Conference on 3D Immersion (IC3D), 2020. doi:10.1109/IC3D51119.2020.9376375

- Sergey Lavrushkin, Ivan Molodetskikh, Konstantin Kozhemyakov, Dmitriy Vatolin, “Stereoscopic quality assessment of 1,000 VR180 videos using 8 metrics,” Electronic Imaging, 3D Measurement and Data Processing, 2021. doi:10.2352/issn.2470-1173.2021.2.sda-350

Acknowledgments

We wish to acknowledge the help provided by the CMC Faculty of Lomonosov Moscow State University. The CMC Faculty provided us with the additional computational capacity and disk space needed for our research.

This work is partially supported by the Intel/Cisco Video Aware Wireless Network (VAWN) Program and by grant 10-01-00697a from the Russian Foundation for Basic Research.

Our project “Development of a system for automatic objective quality assessment and correction of stereoscopic video and video in VR180 format” was supported under the START program of the State Fund for Support of Small Enterprises in the Scientific-Technical Fields.

Invitation to the Project

We invite stereographers, researchers and proofreaders to join our 3D-film analysis project. We are open to collaboration and appreciate your ideas and contributions. We welcome feedback and are eager to learn from the experience of people in the film-production industry.

If you would like to participate, please contact us: 3dmovietest@graphics.cs.msu.ru

Contacts

For questions and proposals, please contact us: 3dmovietest@graphics.cs.msu.ru
